AI lobbying surges amid calls for regulation

Lobbying on artificial intelligence (AI) surged in 2023: more than 450 organizations took part, a 185% increase over the previous year. The figures come from an analysis of federal lobbying disclosures that OpenSecrets conducted on behalf of CNBC.

Rising demand for regulation

The upsurge in AI lobbying activities coincides with heightened calls for the regulation of AI technology and the Biden administration's drive to establish these regulations. Notable companies that engaged in lobbying in 2023 to influence regulations that could impact their operations include TikTok owner ByteDance, Tesla, Spotify, Shopify, Pinterest, Samsung, Palantir, Nvidia, Dropbox, Instacart, DoorDash, Anthropic, and OpenAI.

Diverse industry participation

The organizations involved in AI lobbying in 2023 represented a broad spectrum of industries, from major tech corporations and AI startups to pharmaceuticals, insurance, finance, academia, telecommunications, and more. The surge marks a significant shift: until 2017, the number of organizations reporting AI lobbying remained in the single digits. The practice has steadily gained traction in the years since and reached a record high in 2023.

New entrants and increased expenditure

Of the more than 450 organizations that lobbied on AI in 2023, more than 330 had not done so in 2022, pointing to a notable influx of new entrants into AI lobbying. The data also showed that organizations lobbying on AI typically raised a range of other issues with the government. In total, these entities reported spending more than $957 million on federal lobbying in 2023, a figure that covers issues beyond AI.

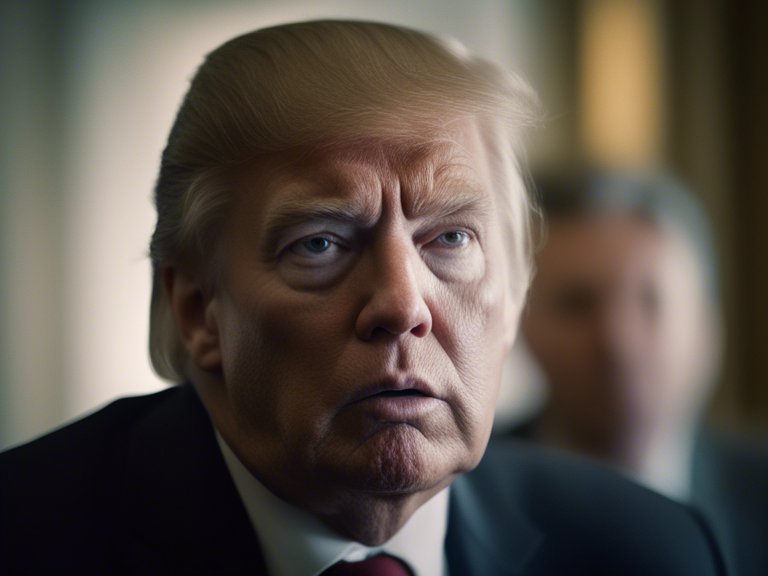

Regulatory developments and public engagement

In October 2023, President Biden issued an executive order on AI, the U.S. government's first action of its kind. The order mandated new safety assessments, equity and civil rights guidance, and research on AI's impact on the labor market. It also tasked the U.S. Department of Commerce's National Institute of Standards and Technology (NIST) with formulating guidelines for evaluating specific AI models and developing consensus-based standards for AI.

Following the executive order, a flurry of lawmakers, industry groups, civil rights organizations, and labor unions delved into the 111-page document, scrutinizing the priorities, deadlines, and wide-ranging implications of this landmark action. A pivotal topic of discussion revolved around AI fairness, with some stakeholders expressing concerns that the order did not adequately address real-world harms stemming from AI models, particularly those affecting marginalized communities.

NIST's role in shaping AI standards

Since December 2023, NIST has been soliciting public feedback from businesses and individuals on how best to shape AI rules. The areas under discussion include developing responsible AI standards, AI red-teaming, managing the risks associated with generative AI, and reducing the spread of "synthetic content" such as misinformation and deepfakes.

The future of AI regulation

The surge in AI lobbying, together with ongoing efforts to shape AI rules, shows how quickly AI governance is evolving and how many different stakeholders are involved. As the technology advances, calls for robust and equitable regulation reflect a growing recognition of AI's far-reaching impact across sectors and of the need to address its potential consequences.
