Nvidia Unveils Blackwell AI Chips and Software, Paving Way for AI Advancements

Nvidia introduces Blackwell, a new generation of AI chips and software, aiming to boost performance and accessibility for AI models.

At its developer conference in San Jose, Nvidia announced a new generation of artificial intelligence chips and software for running AI models. The move comes as Nvidia seeks to solidify its position as the leading supplier for AI companies.

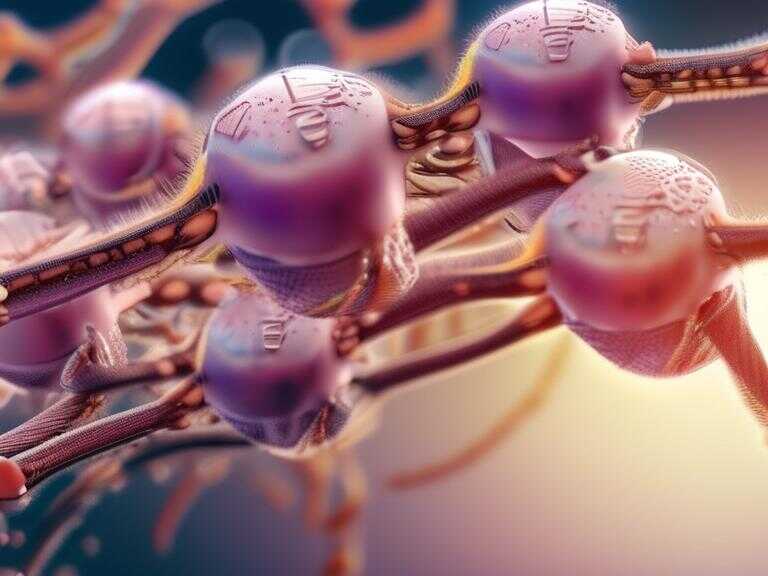

Blackwell: The New Generation of AI Processors

Nvidia's latest generation of AI graphics processors is named Blackwell. The first chip in the series, the GB200, is set to be released later this year. The GB200 provides 20 petaflops of AI performance, five times the 4 petaflops offered by the H100.

Enhanced Capabilities for AI Companies

The increased processing power of the Blackwell GPU will allow AI companies to train larger and more complex models. The chip also features a "transformer engine" optimized for running transformer-based AI, the core technology behind models such as ChatGPT.

Cloud Access and Server Solutions

Major tech players including Amazon, Google, Microsoft, and Oracle are set to offer access to the GB200 through their cloud services. Amazon Web Services plans to build a server cluster with 20,000 GB200 chips. The GB200 will also be available as an entire server named the GB200 NVLink 2, which combines 72 Blackwell GPUs with other Nvidia components designed for AI model training.

Game-Changing Technology

Nvidia's new software, known as NIM, aims to simplify the deployment of AI models by making it possible to run programs on a range of Nvidia GPUs, including older models that are suited to deployment. The goal is to make AI technologies more accessible and easier for developers to use.

Strategic Pricing and Partnerships

Nvidia did not disclose pricing for the GB200 or the systems built around it. Analysts estimate that Nvidia's Hopper-based H100 costs between $25,000 and $40,000 per chip, with entire systems reaching up to $200,000. Nvidia is also collaborating with AI companies such as Microsoft and Hugging Face to ensure that their AI models run well on compatible Nvidia chips, whether on customers' own servers or on cloud-based servers.

Expanding Accessibility to AI

Nvidia's NIM software is also set to enable AI workloads on GPU-equipped laptops, extending these capabilities beyond cloud-based servers.
